Blade Runner 2049 misses mark on artificial intelligence: U of T experts

Published: October 23, 2017

The portrayal of artificial intelligence in Blade Runner 2049 takes on a nuance absent in the original film. In addition to the advanced intelligence of the replicants – human-seeming biological machines – there is also the holographic character Joi, a logical extension of today’s digital assistants such as Apple’s Siri, Microsoft’s Cortana or Amazon’s Alexa.

Aside from these two depictions, AI is largely relegated to the background. It performs simple tasks such as running machines and autonomously piloting spinners (Blade Runner’s flying cars). It also dynamically alters the environments that people inhabit, causing light to move with them as they walk through beautiful and brutal constructed spaces.

In all of these forms, the film makes a key distinction between human and artificial intelligence: Only people are capable of creativity – AI is mechanical.

As researchers interested in digital automation and expertise, we can say that Blade Runner 2049’s depiction of AI as mundane belies today’s reality and the history of the field.

Recent examples show that computers have begun to participate actively in creative fields such as design, collaborating with humans to shape the objects and experiences that fill our daily lives. There is also a long history of computation in creative work that needs to be addressed.

Generative design

The newly emerging field known as “generative design” seeks to incorporate the computer more actively into the design process. In these kinds of computer applications, the designer uploads a “seed geometry” – akin to a keyframe reference drawing an animator might use – and then sets a series of requirements ranging from the aesthetic to the functional.

The software then searches through a series of procedurally generated designs based on the seed geometry and surfaces those that meet the requirements. The designer can select one of these “solutions” for production, or use it as the next seed geometry, in which case the process begins again.
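The seed, generate, filter and reseed loop can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a description of how Dreamcatcher works internally: the `Design` class, the toy `max_load_lb` and `material` formulas, the random-perturbation generator and the “least material” selection rule are all hypothetical stand-ins. Only the two requirements (bearing up to 300 pounds, a seat 18 inches off the ground) come from the Elbo chair brief.

```python
from __future__ import annotations

import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Design:
    """Hypothetical, highly simplified chair geometry (not Dreamcatcher's model)."""
    seat_height_in: float  # seat height above the floor, in inches
    leg_width_in: float    # side length of each square leg, in inches


def material(d: Design) -> float:
    """Toy material estimate: four square legs as tall as the seat."""
    return 4 * d.leg_width_in ** 2 * d.seat_height_in


def max_load_lb(d: Design) -> float:
    """Toy stand-in for structural analysis: capacity grows with leg width."""
    return 250.0 * d.leg_width_in


def meets_requirements(d: Design) -> bool:
    """Requirements taken from the Elbo brief: 300 lb load, ~18 in seat."""
    return max_load_lb(d) >= 300.0 and abs(d.seat_height_in - 18.0) <= 0.5


def generate_variants(seed: Design, n: int, rng: random.Random) -> list[Design]:
    """Procedurally generate n candidates by randomly perturbing the seed."""
    return [
        Design(
            seat_height_in=seed.seat_height_in + rng.uniform(-1.0, 1.0),
            leg_width_in=seed.leg_width_in * rng.uniform(0.7, 1.3),
        )
        for _ in range(n)
    ]


def generative_round(seed: Design, n: int, rng: random.Random) -> list[Design]:
    """Generate many candidates, but surface only those that pass."""
    return [d for d in generate_variants(seed, n, rng) if meets_requirements(d)]


def run(seed: Design, rounds: int, n: int, rng: random.Random) -> Design:
    """Repeat the loop, feeding the chosen solution back in as the next seed."""
    current = seed
    for _ in range(rounds):
        solutions = generative_round(current, n, rng)
        if not solutions:
            break  # nothing passed; keep the previous choice
        # Stand-in for the designer's choice: take the design using least material.
        current = min(solutions, key=material)
    return current
```

For example, `run(Design(18.0, 1.5), rounds=5, n=200, rng=random.Random(0))` returns a design that still satisfies both requirements but uses thinner legs than the seed. The key design choice is that generation and evaluation are separate steps: the generator proposes freely, and `meets_requirements` decides what the designer ever gets to see.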

The Elbo chair is a recent example of a successful use of generative design that has garnered a great deal of attention. Created in the summer of 2016 by Autodesk researchers Brittany Presten and Arthur Harsuvanakit, the chair was a part of a project examining how to best incorporate generative design tools into traditional computer-aided design (CAD) software.

According to Harsuvanakit, a chair was selected for this project because of the object’s surprising complexity. A “good” chair not only has to be aesthetically pleasing; it also needs to be comfortable and support a person’s weight. There is also the issue of “produceability”: however well a design meets the other requirements, it means little if the chair cannot actually be fabricated.

The requirements Presten and Harsuvanakit ultimately settled upon were that the chair was to be produced using wood and a CNC router – a computer-controlled cutting machine. Functionally, the chair would need to bear a weight of up to 300 pounds and the seat would be 18 inches off the ground.

Aesthetically, Danish mid-century modern was selected for the chair’s style, and a seed geometry was created that paid homage to several chairs from this school of design. The chair’s name is a reference to Hans J. Wegner’s design icon, the Elbow Chair.

The final results are striking. With its seemingly strange blend of Danish and almost organic influences – apt, considering that generative design is often likened to evolutionary processes – the Elbo chair also uses 18 per cent less material and places less stress on its joints than the seed geometry.

News about the achievement focused on how Dreamcatcher, the generative design application used to create the Elbo chair, heralds the new creative capabilities of computers. Harsuvanakit and Presten delegated much of the decision-making process to computational processes, using algorithms to replace some of the aesthetic, material, and construction decisions typically made by humans.

While this story may seem overwhelmingly modern, the use of computers in creative work dates back to the early days of computation.

Computer-written western

On Oct. 26, 1960, CBS aired an hour-long special entitled The Thinking Machine. Focused on the rise of digital computers and the potential of artificial intelligence, the special also included three short television dramas that had been written by a computer.

The program that “authored” these three western playlets was called SAGA II. It was developed for the TX-0 computer by Douglas Ross and Harrison Morse of the Massachusetts Institute of Technology’s Electronic Systems Laboratory. Ross said that while they had a great deal of fun developing the program, their underlying intent was not levity but to demonstrate the potential for computers to engage in “creative” acts.

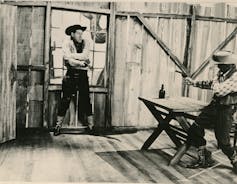

In the first playlet, a shootout leaves a bank robber mortally wounded. After taking one final drink, the bandit dies, leaving the sheriff to reclaim the money and walk off into the proverbial sunset.

The second playlet is almost identical to the first, but this time the sheriff is mortally wounded instead, leaving the bandit free to escape with his ill-gotten gains. Upon seeing this second iteration, the host of the special, actor David Wayne, said: “Well, I can see that there is one thing the computer doesn’t know – in television the bad guy is supposed to lose.”

Wayne’s remark highlights a key difference between SAGA II and the system behind the Elbo chair: while both programs are creative in the sense that they produce something novel, the newer Autodesk Dreamcatcher system adds a discriminative capacity to the mix.

Empowered machines a transformative technology

SAGA II can at best be described as a blunt instrument of the creative process. Each time the program was run, a new western would be generated. It was then up to the researchers and producers to judge the quality of the resulting script.

Conversely, Dreamcatcher generated large volumes of possible designs for the Elbo chair but only those that met the parameters established by Presten and Harsuvanakit were shown to the user. As such, one can say that Dreamcatcher was actively considering what made a good design.

There are certainly lessons to be learned by exploring the history of CAD as it relates to our current understanding of creative work, but the generative design demonstrated by the Elbo chair also requires us to come to terms with issues around discrimination, in the sense of judging which outputs are good.

It is this newfound capacity for determining quality that makes generative design distinctive, and it requires us to consider how the line between human expertise and computational systems is changing.

Similar developments in medicine demonstrate the capacity of deep learning systems to diagnose cancer. Venture capitalist Vinod Khosla has said that he “can’t imagine why a human oncologist would add value.” Venture capitalists like Khosla see these new computational capacities as increasing economic abundance and improving human quality of life.

Yet the question remains: What do we lose when we task computational systems with discriminating on our behalf? Researchers in oncology have noted ways in which clinical diagnosis serves as a site for explaining how cancer works, and these explanations often lead to new medical breakthroughs.

AI researcher Geoffrey Hinton of the Vector Institute in Toronto has similarly noted that “A deep-learning system doesn’t have any explanatory power.” This has obvious ramifications for medicine.

The future impact of these kinds of computational systems is unknown. What is certain, however, is that both what it means to be creative and the roles humans and computers play in that process are changing.

Daniel Southwick is a PhD candidate at U of T. Matt Ratto is an associate professor in U of T's Faculty of Information.

This article was originally published on The Conversation.